Human Misalignment

An immediate danger from AI: getting what we want?

I have very mixed feelings about AI. I find it fun to play around with, and sometimes useful. Uncertainty about future developments (and their social effects) feels extremely unsettling. But much current discourse on the topic is pretty frustrating, simultaneously dismissively neglecting the significance of worst-case scenarios while exaggerating silly objections in order to push for blanket ideological taboos. I’d like to see more discerning takes that seek to explicitly distinguish promising uses of AI from problematic ones.

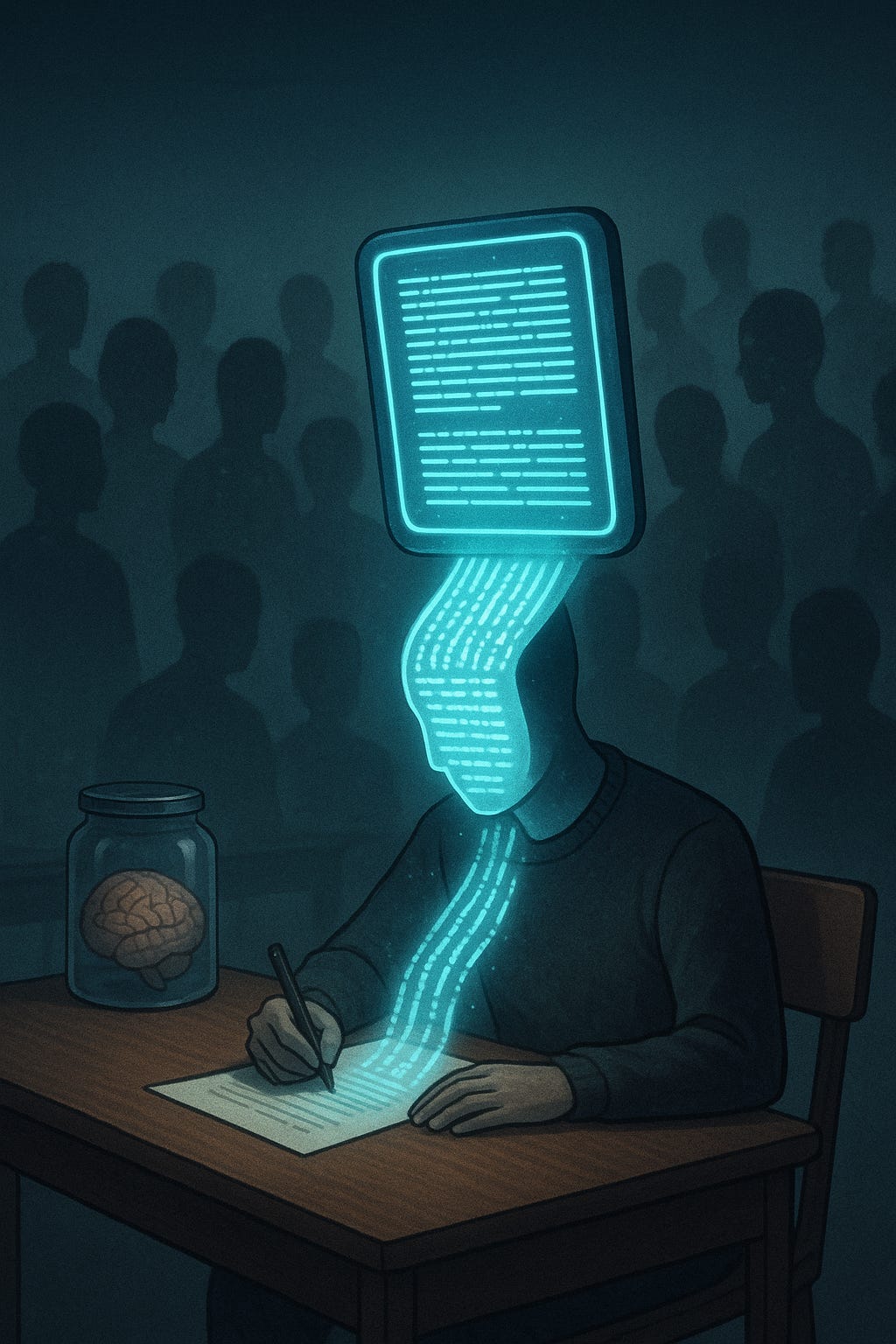

Outsourcing Thinking

As a professor, I find that the most salient use of AI is in education, and specifically, cheating: students using AI to do their coursework for them. Coursework is a primary means by which we seek to ensure that our students are thinking about the course material; by outsourcing this work to machines, students undermine the whole purpose of their getting an education. Or, at least, my purposes, and what professors like me value in education. (See also Derek Thompson on The End of Thinking.)

One of the most interesting challenges of present-day AI is how it exposes the gaps between the values we would like people to have and the priorities that they really have. When everyone has a personal digital assistant that can adequately (if imperfectly) perform a wide range of tasks on demand, you soon learn what people really value. I love the idea of AI helping us to skip past the worthless boilerplate in life (corporate blather, etc.), freeing up time to focus on what really matters. I don’t have any concerns about my personal usage (though I probably underutilize it): I like thinking and writing about interesting things for myself, so am not at all tempted to “outsource” that! But academics may be unusual in this respect. Many others care much less about learning, reflecting, refining their ideas, developing critical thinking skills, and so on. Many, perhaps most, would rather get an instant fake credential and spend their time on other things instead. (Sad!)

So there seems to be a distressing tension here between my broadly liberal values, on which it’s good for people to get more of whatever they want, and my elitist/perfectionist values, on which it’s good for people to get more of what will help them develop into better people. Ideally, the two would coincide, as people would value their own self-development. But maybe what we’re finding is that too many just… don’t?

Maybe this is too uncharitable, and most students do truly value education, but just feel that the stakes are too high to gamble their future economic prospects on it—especially if most of their peers appear to be cheating. Some might view outsourcing their thinking to AI as a kind of giving in to temptation rather than a reflection of their true values. It’s a bit hard to say how exactly to differentiate these two possibilities. (It’s not like there’s a “true values” box in their head that we could peek into to check.) Maybe they at least feel a bit guilty or conflicted, and agree on reflection that there’s an important sense in which getting a real education would be better for them. Perhaps those tempted to act against their true values would agree to structural changes that remove or mitigate the temptation. Whatever it precisely consists in, the “giving in to temptation” characterization certainly sounds more sympathetic than “doesn’t sufficiently care about education.”

Other cases of (AI-exacerbated) human misalignment?

There are obviously higher-stakes human misalignment risks to worry about: terrorists using AI to create bioweapons, authoritarians propping up their rule, etc. But, while acknowledging their importance, let’s put aside those sorts of extreme cases for now and focus on the potential for more routine misalignment of the “everyman”.

Aside from education, the other big example I often see discussed is relationships, and worries that AI companions will sap people’s motivation to bother forming real human connections.

Are there other possibilities that worry you? Share your thoughts in the comments.

I worry that if AIs become conscious in the future, most people will still be willing to use them in ways that are bad for them because it’s too useful and convenient, even though the cost to the human would be much smaller than the cost to the AI. Factory farming and any intuitive grasp of human psychology already confirm that if you make people choose between their personal comfort and the suffering of entities that they don’t necessarily regard as similar enough to warrant consideration, humans will pick their comfort every time. There are also a number of less serious but more immediate concerns: that interacting with AI will accustom many people not to seek social interaction, or to expect much more sycophancy, or that AI will prove addictive in the way social media is and cause all the problems addiction normally causes. I am not sure whether you would include possibilities like mass automation causing social unrest that proves extremely disruptive and disastrous.

There's a painful and worrisome confluence of trends:

1) Students cheating on their education (worsening the already reversed Flynn effect)

2) Junior knowledge/administrative jobs being the first to get automated

And maybe

3) Increasing support for authoritarianism, political violence and other illiberalism (education is protective for democracy)

Even if humans somehow maintain power through AGI and full automation of labor, the human electorate becoming less educated, intelligent, and liberal would suggest that that's not a very desirable trajectory either. It leads me to think we need some suite of technological interventions that make humans better: more curious, more altruistic, more intelligent. Could be drugs, brain-computer interfaces, genetic enhancement of embryos...